Improving Patient Outcomes Through Preparedness And Efficiency

As medical procedures increase in complexity – and the time to complete them decreases – augmented reality is the right tool at the right time for team-based training and surgery.

As light-based tools and technologies advance, their burgeoning applications are reshaping many aspects of our public and private lives, especially in healthcare. Consider the accelerating advances in AI, robotics, augmented reality (AR) and mixed reality, headsets, holography, displays, and 3D imaging. Their influence on the health and medical professions has led to positive changes in training programs, surgical preparation, and open surgery. These changes include better optical systems, enhanced visualization, and, with the high-definition 3D modeling offered by AR and virtual reality (VR), better patient outcomes.

AR and VR in Medical Training

Although not yet commonplace in the OR, assistive technologies such as collaborative AR and immersive VR already support both medical students and practicing professionals in training and pre-op environments. They enhance standard two-dimensional static or video images with customized software and 3D models of human bodies, systems, and organs. The models can be viewed through headsets or on large flat-screen displays. Anatomy, for example, can be taught to one person or to a team wearing individual headsets, each projecting floating holograms that respond to touch and voice. Whether wearing a headset or using enhanced monitors and displays, practitioners can explore every organ, tissue, and system, looking around and inside each one, while digital guides teach and respond in real time to the user's needs and actions.

AR training enhances educational environments and lets medical professionals enter practice already supported by tools that blend physical and digital realities. These tools help care providers work together more efficiently to make precise diagnoses, deliver treatments, and improve outcomes.

AR and VR: A Real-Time Surgical Support Tool

AR-enhanced surgery can be delivered through headsets or screens that display 3D holograms, or through endoscopes whose information is shown on large flat-screen displays. Either method relies on data and visual overlays. With augmentation, the OR team can have simultaneous, hands-free access to patient information, such as health history and allergies, combined with real-time video and 3D overlays of organs and systems. AR can provide views of the anatomy beyond what a surgeon can see with the naked eye.

Once surgery begins, a spine surgeon, for example, may see a CT scan overlay that allows more precise placement of tools. A surgeon performing a breast cancer operation may be able to see cancerous cells highlighted by an enhanced-contrast overlay. In both open and laparoscopic procedures, surgeons can visualize and assess blood perfusion with real-time overlays that combine infrared (IR) fluorescence and visible-light images.
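The blending step behind a real-time fluorescence overlay can be sketched with simple per-pixel alpha compositing. This is a minimal illustration, not any vendor's method: it assumes the fluorescence and visible-light frames are already registered pixel-for-pixel and normalized to [0, 1], and the green pseudocolor and the `threshold` and `max_alpha` values are hypothetical choices.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def overlay_fluorescence(
    visible: List[List[RGB]],
    fluorescence: List[List[float]],
    color: RGB = (0.0, 1.0, 0.0),  # hypothetical pseudocolor for the IR signal
    threshold: float = 0.1,        # hypothetical cutoff for background/noise
    max_alpha: float = 0.7,        # hypothetical maximum overlay opacity
) -> List[List[RGB]]:
    """Blend a pseudocolored fluorescence map onto a visible-light frame.

    Assumes both images are already registered (same size, pixel-aligned)
    and that all values are normalized to [0, 1].
    """
    if len(visible) != len(fluorescence):
        raise ValueError("images must have the same height")
    cr, cg, cb = color
    out: List[List[RGB]] = []
    for vis_row, fl_row in zip(visible, fluorescence):
        if len(vis_row) != len(fl_row):
            raise ValueError("images must have the same width")
        new_row: List[RGB] = []
        for (r, g, b), signal in zip(vis_row, fl_row):
            # Weak signal is treated as background; otherwise opacity grows
            # with fluorescence intensity (clamped to 1.0).
            alpha = 0.0 if signal < threshold else max_alpha * min(signal, 1.0)
            new_row.append((
                (1.0 - alpha) * r + alpha * cr,
                (1.0 - alpha) * g + alpha * cg,
                (1.0 - alpha) * b + alpha * cb,
            ))
        out.append(new_row)
    return out
```

Scaling opacity with signal strength keeps tissue without fluorescence close to its natural appearance while strongly fluorescing regions stand out; a clinical system would add calibration, noise handling, and display-specific color mapping.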

Optics in AR/VR Systems

AR combined with indocyanine green (ICG) fluorescence provides a clear view of a patient's cerebral anatomy.

Image courtesy of Leica Microsystems.

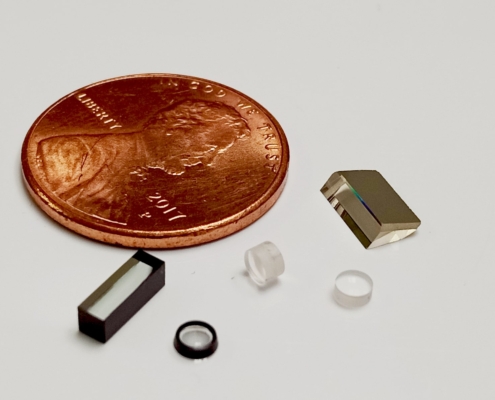

Several types of optics make these capabilities possible. 3D imaging modalities capture the 3D data used for AR image registration, while fluorescence imaging with contrast agents and hyperspectral imaging provide visualization beyond what the surgeon can see unaided.

3D imaging works by capturing data corresponding to the surface height or the distance to the camera. This data is represented as a point cloud and combined with 2D image data for surface rendering. Fluorescence imaging excites a fluorophore with a shorter-wavelength light source; a specialized filter in the camera blocks this excitation light and passes the longer-wavelength emission, which the camera then captures. Hyperspectral imaging breaks the standard red, green, and blue (RGB) image into finer spectral samples and extends sensing into the ultraviolet (UV) and near-infrared (NIR) ranges.
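Turning per-pixel distance data into a point cloud can be sketched with the standard pinhole camera model. This is a minimal sketch under stated assumptions: the sensor reports distance along the optical axis for each pixel, the color image is already aligned to the depth map, and the `Intrinsics` values (focal lengths and principal point, normally obtained by camera calibration) are hypothetical. Real systems may measure depth by stereo, structured light, or time of flight, and each adds its own processing.

```python
from dataclasses import dataclass
from typing import List, Tuple

Color = Tuple[int, int, int]
Point = Tuple[float, float, float, Color]  # (x, y, z, rgb)


@dataclass(frozen=True)
class Intrinsics:
    """Hypothetical pinhole camera parameters, all in pixels."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def depth_to_point_cloud(
    depth: List[List[float]],
    color: List[List[Color]],
    k: Intrinsics,
) -> List[Point]:
    """Back-project a depth map into a colored 3D point cloud.

    depth[v][u] is distance along the optical axis (any unit, e.g. mm);
    values <= 0 mark pixels with no valid measurement and are skipped.
    """
    points: List[Point] = []
    for v, (depth_row, color_row) in enumerate(zip(depth, color)):
        for u, (z, rgb) in enumerate(zip(depth_row, color_row)):
            if z <= 0:
                continue  # missing depth: no 3D point for this pixel
            # Invert the pinhole projection u = fx * x / z + cx (same for v).
            x = (u - k.cx) * z / k.fx
            y = (v - k.cy) * z / k.fy
            points.append((x, y, z, rgb))
    return points
```

Attaching each pixel's color to its 3D point is one simple way to combine the depth data with 2D image data before surface rendering.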

As physicians integrate these light-based tools and technologies, patients are benefiting. For example, patients are becoming more empowered to participate in preventative care through personal biometric devices and clothing, smart devices and apps that gather and transmit data, and improved access to telehealth. These changes could shift prevailing attitudes about health care, from a system of people and processes that treats sickness to one that supports us in maintaining our health.

If you’re interested in custom optical system design for biomedical applications, contact our team to talk it through.